Consistent Depth of Moving Objects in Video

1 Google Research, 2 MIT, 3 Weizmann Institute of Science


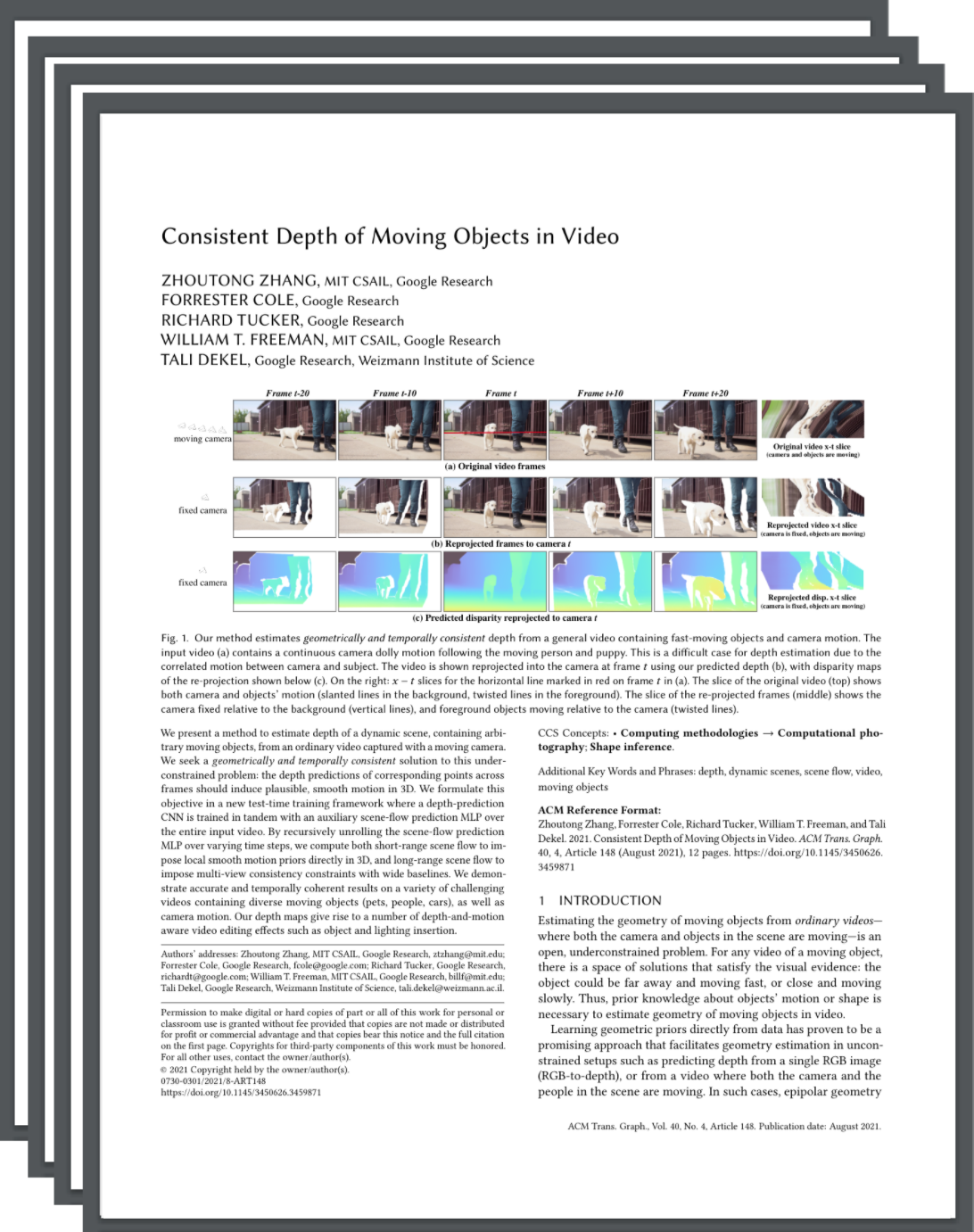

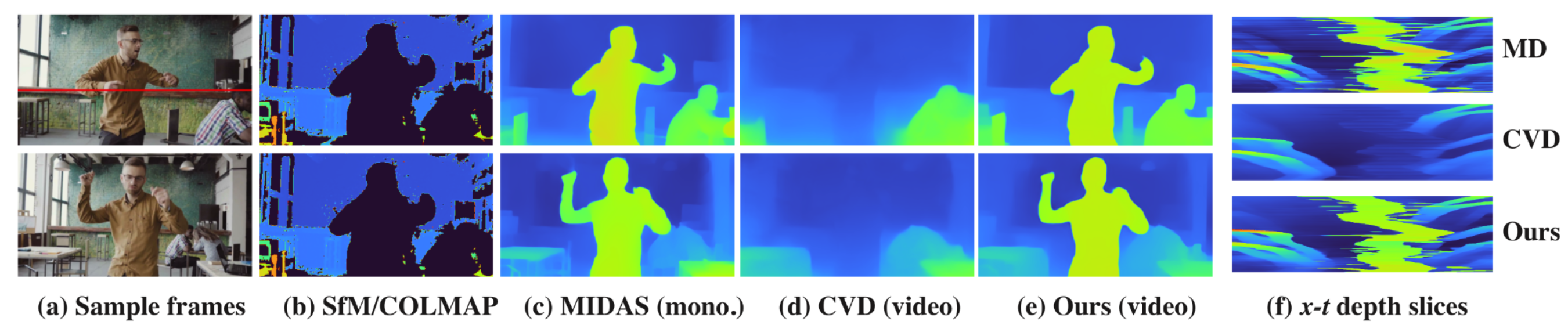

Our method estimates geometrically and temporally consistent depth from a general video containing fast-moving objects and camera motion. The input video (a) contains a continuous camera dolly motion following the moving person and puppy, a difficult case for depth estimation because the camera and subject motions are correlated. The video is reprojected into the camera at frame t using our predicted depth (b), with disparity maps of the reprojection shown below (c). Bottom: x-t slices along the horizontal line marked in red on frame t in (a). (d) The slice of the original video (top) shows both camera and object motion (slanted lines in the background, twisted lines in the foreground). The slices of the reprojected frames (e, f) show the camera fixed relative to the background (vertical lines) and the foreground objects moving relative to the camera (twisted lines).
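Panels (b), (e), and (f) rely on reprojecting pixels into the camera at frame t using predicted depth. The sketch below shows the standard pinhole reprojection of a single pixel. The conventions it assumes (z-depth along the optical axis, camera-to-world rotations `R_s`/`R_t`, camera centers `c_s`/`c_t`, shared intrinsics `fx, fy, cx, cy`) and the function names are illustrative assumptions, not the authors' code.

```python
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]


def matvec(m: Mat3, v: Sequence[float]) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(m: Mat3) -> Mat3:
    """Transpose a 3x3 matrix (for a rotation, this is its inverse)."""
    return [[m[j][i] for j in range(3)] for i in range(3)]


def reproject_pixel(
    u: float, v: float, depth: float,
    fx: float, fy: float, cx: float, cy: float,
    R_s: Mat3, c_s: Sequence[float],
    R_t: Mat3, c_t: Sequence[float],
) -> Tuple[float, float, float]:
    """Map pixel (u, v) with z-depth `depth` in source camera s into target camera t.

    Assumed convention: R_* rotates camera axes into world axes and c_* is the
    camera center in world coordinates. Returns (u_t, v_t, depth_in_t).
    """
    # 1. Unproject: pixel + depth -> 3D point in the source camera frame.
    p_s = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    # 2. Source camera frame -> world frame.
    p_w = [a + b for a, b in zip(matvec(R_s, p_s), c_s)]
    # 3. World frame -> target camera frame (inverse rotation = transpose).
    p_t = matvec(transpose(R_t), [a - b for a, b in zip(p_w, c_t)])
    if p_t[2] <= 0:
        raise ValueError("point lies behind the target camera")
    # 4. Project with the same pinhole intrinsics.
    return (fx * p_t[0] / p_t[2] + cx, fy * p_t[1] / p_t[2] + cy, p_t[2])
```

Only camera motion enters this transform. Static background points therefore land at consistent positions across reprojected frames (the vertical lines in (e, f)), while points on moving objects do not, which is why the foreground still traces twisted lines.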

Abstract

We present a method to estimate depth of a dynamic scene, containing arbitrary moving objects, from an ordinary video captured with a moving camera. We seek a geometrically and temporally consistent solution to this underconstrained problem: the depth predictions of corresponding points across frames should induce plausible, smooth motion in 3D. We formulate this objective in a new test-time training framework where a depth-prediction CNN is trained in tandem with an auxiliary scene-flow prediction MLP over the entire input video. By recursively unrolling the scene-flow prediction MLP over varying time steps, we compute both short-range scene flow to impose local smooth motion priors directly in 3D, and long-range scene flow to impose multi-view consistency constraints with wide baselines.
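The unrolling idea can be illustrated in plain Python. Here a hypothetical `flow` function stands in for the scene-flow MLP and predicts a 3D point's displacement from frame t to t+1. Applying it k times yields a long-range correspondence from frame t to t+k, whose endpoint could then be compared against the depth predicted at frame t+k for a multi-view consistency term. The function names, and the use of a squared second-difference (acceleration) penalty as the "smooth motion prior", are assumptions for illustration; this page does not specify the paper's exact losses, and only forward flow is sketched.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]
# Hypothetical stand-in for the scene-flow MLP: (3D point at time t, t) -> displacement to t + 1.
FlowFn = Callable[[Point, int], Point]


def unroll(flow: FlowFn, x: Point, t: int, steps: int) -> List[Point]:
    """Apply the one-step flow recursively: returns [x_t, x_{t+1}, ..., x_{t+steps}]."""
    traj: List[Point] = [x]
    for k in range(steps):
        d = flow(traj[-1], t + k)  # displacement predicted at the current position and time
        traj.append(tuple(p + q for p, q in zip(traj[-1], d)))
    return traj


def acceleration_penalty(traj: List[Point]) -> float:
    """Assumed smoothness prior: sum of squared second differences along a trajectory."""
    total = 0.0
    for a, b, c in zip(traj, traj[1:], traj[2:]):
        total += sum((ci - 2 * bi + ai) ** 2 for ai, bi, ci in zip(a, b, c))
    return total
```

For example, a constant-velocity flow such as `lambda x, t: (0.5, 0.0, 0.0)` unrolled for 4 steps from `(0.0, 0.0, 2.0)` ends at `(2.0, 0.0, 2.0)` with zero penalty, whereas a flow whose displacement grows with t is penalized. Short unrolls feed the smoothness term; long unrolls give the wide-baseline correspondences.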

We demonstrate accurate and temporally coherent results on a variety of challenging videos containing diverse moving objects (pets, people, cars), as well as camera motion. Our depth maps give rise to a number of depth-and-motion aware video editing effects such as object and lighting insertion.
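One ingredient of depth-aware object insertion is occlusion-aware compositing. Once every frame has a depth map, an inserted object rendered with its own per-pixel depth should be hidden wherever the scene is closer to the camera. The minimal sketch below shows this per-pixel z-test on nested lists. The function name and the representation (`None` marking pixels the object does not cover) are assumptions, and it omits what a full pipeline would also need, such as anchoring the object to the estimated camera motion, soft edges, and lighting.

```python
from typing import List, Optional, TypeVar

Pixel = TypeVar("Pixel")


def composite_with_depth(
    bg: List[List[Pixel]],
    bg_depth: List[List[float]],
    obj: List[List[Optional[Pixel]]],
    obj_depth: List[List[Optional[float]]],
) -> List[List[Pixel]]:
    """Per-pixel z-test: the inserted object's pixel wins only where it exists and is nearer."""
    out: List[List[Pixel]] = []
    for row_b, row_bd, row_o, row_od in zip(bg, bg_depth, obj, obj_depth):
        out.append([
            o if (o is not None and od is not None and od < bd) else b
            for b, bd, o, od in zip(row_b, row_bd, row_o, row_od)
        ])
    return out
```

Because the test runs independently on every frame, temporally consistent depth matters here: flickering scene depth would make the occlusion boundary of the inserted object flicker as well.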

Video

5 min talk

Results


Paper

Consistent Depth of Moving Objects in Video

Supplementary Material


Code

[code]

BibTeX

@article{zhang2021consistent,
  title={Consistent depth of moving objects in video},
  author={Zhang, Zhoutong and Cole, Forrester and Tucker, Richard and Freeman, William T and Dekel, Tali},
  journal={ACM Transactions on Graphics (TOG)},
  volume={40},
  number={4},
  pages={1--12},
  year={2021},
  publisher={ACM New York, NY, USA}
}

Related Works